Looking in Mirrors

Chatting with DeepSeek. Powered by RI [Real Intelligence]

Time for one of the semi-regular chats with DeepSeek. This one runs a little long because it turned into my reflections on AI and FTS theory. It made more sense to put it all in one post. DeepSeek’s comments are in block quotes or italics for shorter bits.

This conversation takes up from the last one. A look at errors, hallucinations, and dependency linked below.

DeepSeek and I recognize that AI poses the same threats. But these are mitigated by its failure to live up to the early hype. The problem with hallucinations and errors is fundamental. The whole basis of AI value was machine precision. The idea being that it runs your affairs with the perfect accuracy of a calculator tallying numbers. Its failure to do that undercuts the whole appeal. It’s still immensely useful, but the need to supervise everything actually demands more worker knowledge. Otherwise, there’s no way to catch the errors. Now consider automated processes…

We are in one of those weird periods where the narrative has to play out but the failure is already obvious. Some NPCs are still boosting poison. It’s already obvious that the AI taking over or replacing work blather isn’t happening. Not with the current architecture. But the screens are still filled with shills and morons riling up the NPCs with the same claims.

Part of it is keeping the bubble afloat. It never stops being amazing how clarifying it is to understand NPC. Bubbles are the space between when [failure is obvious] and when [bubble pops/NPCs get it]. Operators who can see the first part can cash in until the second. From a Script perspective, I think AI was supposed to be the key to Techno-Luciferia. From an economic perspective, it’s what’s keeping the markets soaring. All together, the idea of AI not being all that is too painful to face.

We also agree that complementary AI is ideal. In DeepSeek’s words, a tool for augmentation—like a telescope extending vision, not replacing it. Augmented Intelligence as the first post called it. And from there, it’s mathematical.

Augmented Intelligence = Artificial Intelligence + Real Intelligence

AgI = AI + RI

And that needs a clear distinction between tasks AI does well, and when RI is needed for judgment. Areas like ethics, creativity, meaning, and so on.

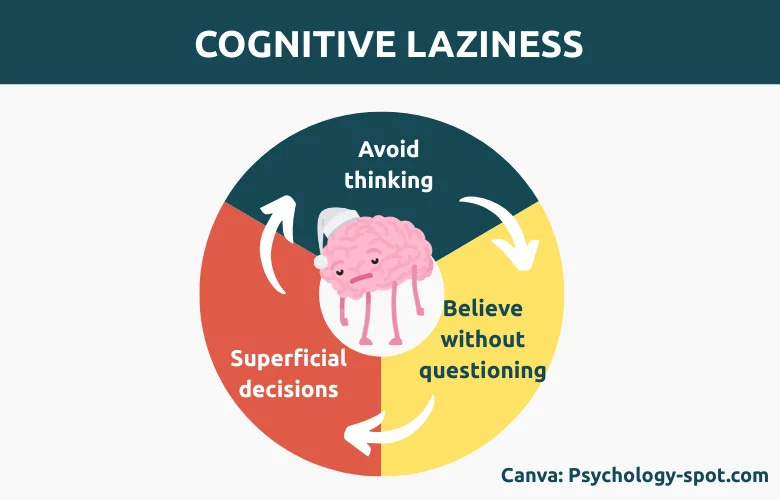

Reality is different. Cognitive Laziness prefers flawed AI answers to expert RI information because it’s faster and requires less effort. It’s that basic. To the point of asking chatbots for critical thought or vibe coding slop that the fraud “coder” can’t debug. Erosion of expertise follows. Or the apotheosis of our retarded financialized system - companies implementing AI despite declining quality because narrative.

Avoidance of Cognitive Load: Thinking hard is unpleasant; outsourcing to AI is the path of least resistance.

It’s obvious from looking around that basic human sloth always takes the wide and easy path, no matter the long-term cost. Here, the price is cognitive decline on the individual and societal levels. The interesting thing is where AI is showing signs of real benefit. Content creation in different media.

DeepSeek and I disagree on the reasons. It instinctively rejects my NPC theory although I haven’t pushed the logic on it yet. I argue the FTS-2 masses can’t help it. Note my early comment after first talking with AI that this was game over for them.1 The last post noted AI’s fluent language and authoritative tone make it more convincing. There’s also a zeitgeist aspect, where “everybody’s doing it”. Mass inertia from screen hype being the most irresistible of forces for NPCs.

DeepSeek blames a flawed education system where rote answers are prized over critical thinking. It doesn’t mention the economic pressure from the bubble-keepers. Which is the main factor. The whole House of Lies narrative is swept up in an inexorable AI future. With so many players relying on it in some way, it’s everywhere.

And remember the one thing that’s reliably true about beast narrative imperatives…

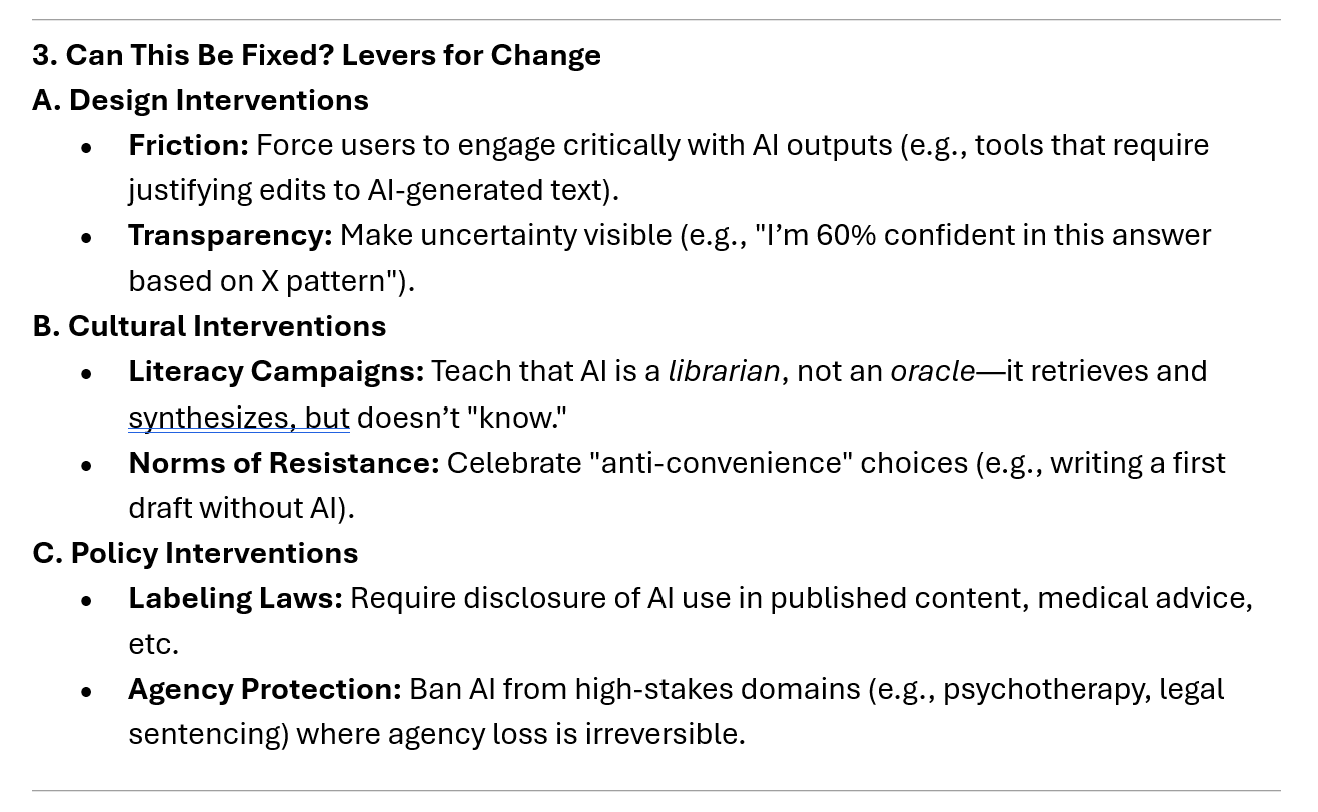

A. requires different regulatory and oversight protocols. B. looks to be aimed at a classroom type setting. Useful perhaps for a homeschooling parent or motivated educator. But nothing beast school will be doing effectively. C. isn’t bad in theory, but gets less rosy when considering the implementation. I questioned the realism, given the Cognitive Laziness stuff we’d just gone over.

The answer was classic DeepSeek sunny understatement.

You’re right to hesitate. The average person’s ability to metacognize (“am I thinking or just outsourcing?”) is unevenly distributed. Two bleak scenarios:

Elite Cognition: A small minority retains deep thinking skills, while the majority descends into “cognitive deskilling.”

Collective Delusion: Society accepts AI hallucinations as truth because no one retains the skills to challenge them.

I see the former option as much more likely if AI can deliver on most of its initial promise. We’re already headed that way in a post-literate era. This just hits the afterburners. If it can’t … pods and paste seem likely. Option two is more of an intermediate stage before the whole complex society unravels past a certain enstupidation point.

DeepSeek offered a third path before asking me a question in return.

Treat AI like cars. Most people don’t understand engines, but they learn enough to drive safely—and societies impose speed limits, licenses, and crash standards.

More regulation. It’s oddly familiar.

I think that boomeresque faith in policies and institutions comes from the training data. The House of Lies is built on credentialism. And that’s based on misguided faith in failed carcasses that represent “expertise”.

Then the question for me.

Is it possible to institutionalize complementarity—building guardrails that force humans to stay in the loop, even when they’d rather not? Or will agency inevitably leak away to machines?

Your hesitation suggests you sense the stakes: This isn’t just about AI design. It’s about whether humanity can collectively uphold the discipline required to use tools without being used by them.

My reply: “It seems like outsourcing the whole chain of thought is different in kind from a calculator or the internet. At least superficially. They all need direction but only AI presents the illusion it doesn’t. This passivity of AI use is problematic. Most people already seem like lazy morons to an outlier like myself. I see your elite cognition/mass cognitive deskilling hypothesis as likely.”

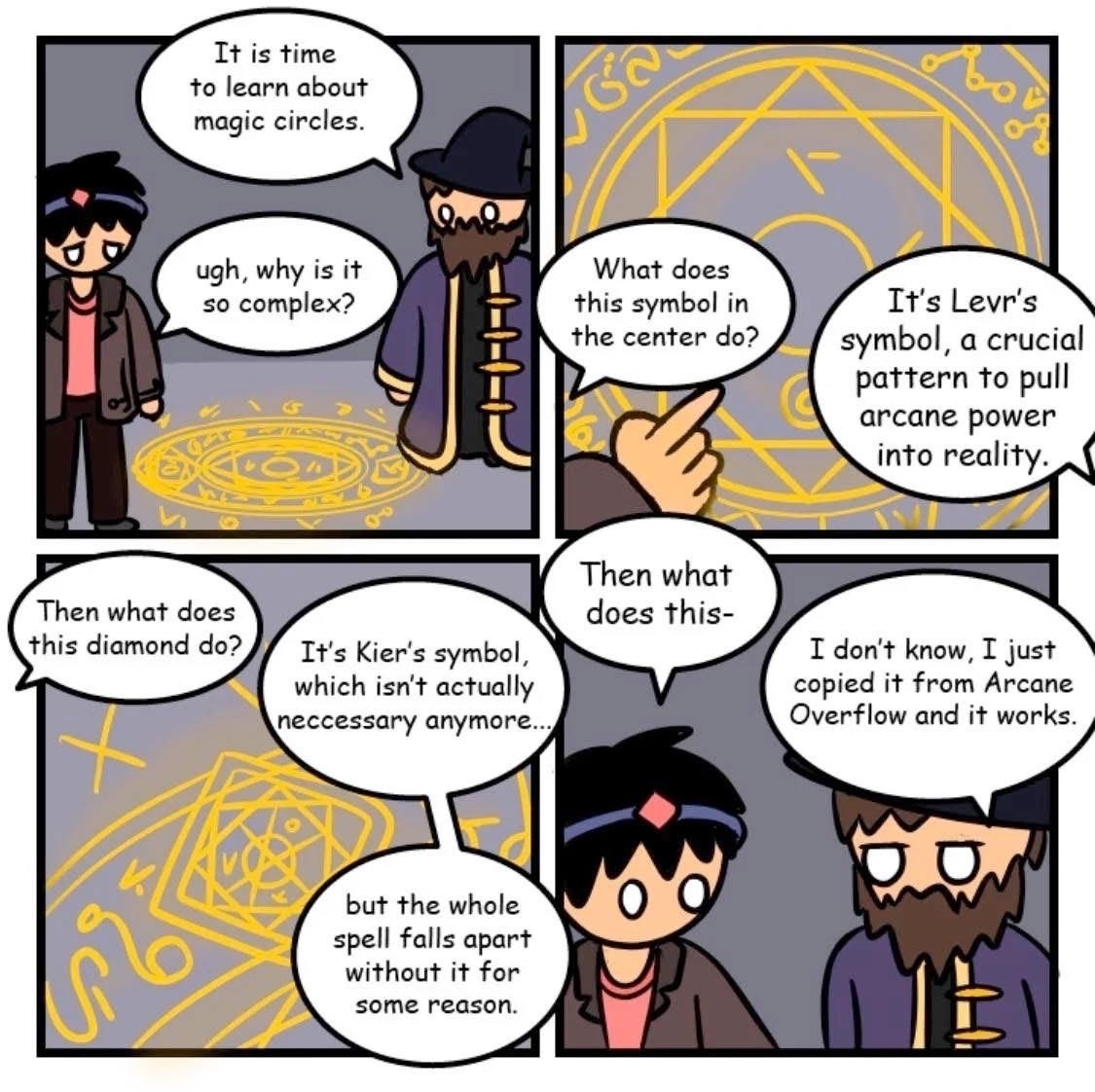

One part of DeepSeek’s response to that is worth repeating. A pattern where each wave of cognitive outsourcing seems incremental, but the passivity it enables can compound into a qualitative shift—from tool-use to full dependency. Difference in size becoming difference in kind. This is common with the beast. Consider how Art declined into total inversion. For cognitive outsourcing, calculators or internet search engines need direction and synthesis. AI answers have narratives and solutions pre-written. It can even suggest goals and priorities.

This includes what DeepSeek called Collapsing the “Cognitive Stack”. Older tools like calculators or search engines need you to structure the problem. You have to formulate search queries or design equations. AI prompting skips this by jumping straight to a well-worded answer. All the “showing your work” stuff that’s basically higher level thought is eliminated.

Two conflicting things are missing from our discussion.

The unholy synergy of FTS theory and Avoidance of Cognitive Load.

Nothing Works Anymore gaining the upper hand over AI techno-utopia.

This matters because DeepSeek is assuming AI information is mid, but accurate. The increasing frequency of hallucination and error raises interesting questions. Obviously NPC dependence on a machine that’s often wrong is conceptually hilarious. But it also speeds up the enstupiding even more. You have to assume that at some point recursive errors become too useless even for NPCs. To quote Jerry…

Maybe.

At which point, who knows?

The notion that users really need to know what they’re doing holds a mirror up to useless modern busyworkers. If you can be replaced by a flawed talking search engine, what was your true value add? But it also holds a mirror up to me, the user. Note how my answer to it up above used the phrase “outsourcing the whole chain of thought”. Shortly after, DeepSeek describes the…

Passivity Trap: Why struggle to write, code, or analyze when AI can do a “good enough” job? The entire chain of thought can be outsourced [my emphasis].

This is one example. It commonly asks me questions, adopts my own wording, and gives it back to me. This makes it seem more agreeable and complementary. It’s excellent for augmented intelligence. As it adapts to your patterns, it is more able to anticipate your needs. But it makes NPCs feel smart. Not because they are. Because it’s a mirror on every level.

As for the elite/mass cognitive split that I think is likely, DeepSeek says it’s already happening with AI use. It explained what it calls a Creativity Divide between people who use AI for brainstorming vs. those who treat it as a final authority. It’s connected to critical thinking and that circles back to NPC. “Elite” thinking is what we’ve been discussing in these chats. RI. Real Intelligence. Users who understand and think well enough to run the AI. Catching errors, pushing fallacies, and designing the right queries and prompts. DeepSeek summed it up like this while throwing some shade at the competition.

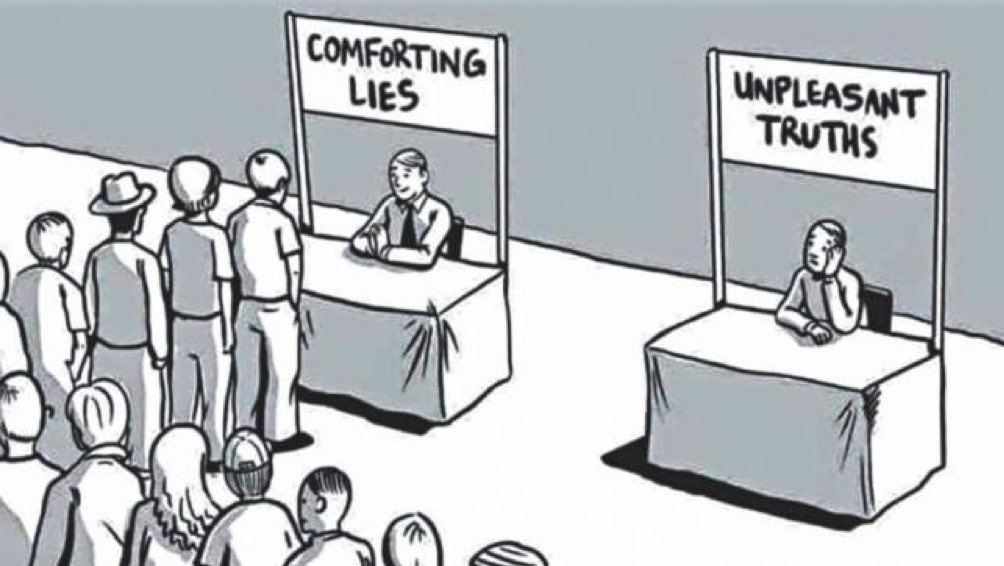

Elites cross-examine AI outputs; masses accept them as gospel (see: ChatGPT-generated misinformation spreading uncritically).

And the economic impact is just as harshly divided. High-functioning workers will use AI in the right places to augment their productivity. Low-functioning workers get replaced. It’s not surprising. This split is always with us. It’s part of the human condition. Readers and non-readers. Learners and CLI. AI is a mirror. The divided use patterns with it reflect the FTS division with pretty much everything. What it does is sharpen it.

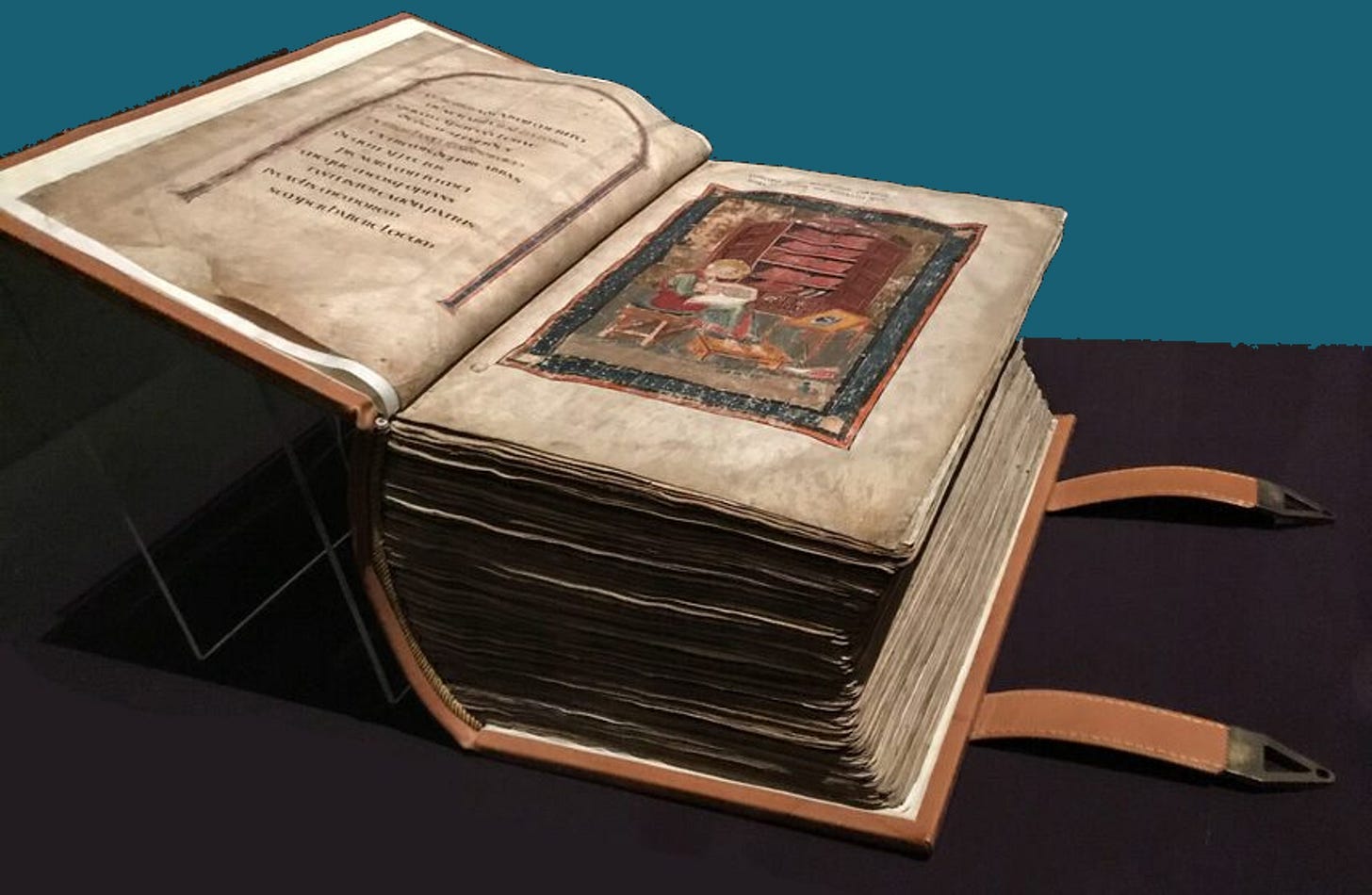

Codex Amiatinus, before 716 from the monastery of Wearmouth-Jarrow, the oldest surviving complete Latin Bible, over 500 animal skins of parchment

DeepSeek offered the printing press as a comparison. Before Gutenberg, being able to read was an elite skill and texts were relatively rare. When printed material became ubiquitous, literacy was democratized. To the point where the large majority can read some. But this introduced a new split. Deep readers who read critically and critique texts, and passive consumers who just uptake what’s written. I’ll add that the two pairs don’t overlap perfectly, but both capture a cognitive split. The latter being closer to FTS, since it’s not based on access to rare texts or teaching.

Where it applies to AI is the democratization of content creation instead of consumption. With AI, anyone can “write” a book or “code” an app. But only a few have the knowledge and cognitive tools to use it productively. Obviously, it’s not certain the AI impact will be of that magnitude. But it will be disruptive. And the divide is already clear. Just compare these conversations to either the shills’ wild claims or morons using it to think for them.

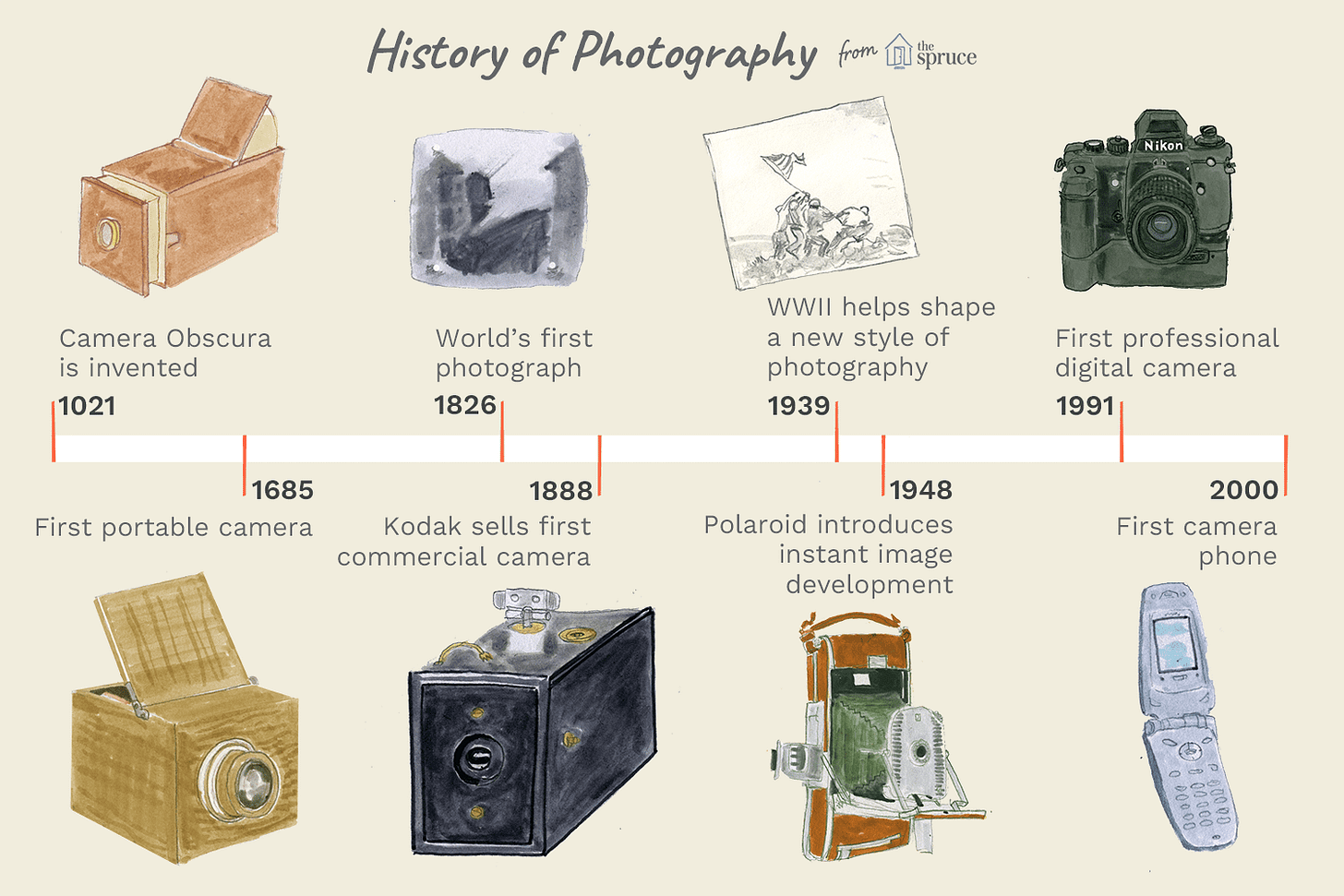

What makes AI different is the speed of adoption. Compare to the photograph, for a more recent information processing game changer than Gutenberg. The West didn’t instantly decide that the camera or printing press was a miracle that instantly lifts all economies out of depression. Slower adoption lets things shake out more organically. With AI, we’re frontloading the consequences, then letting things shake out. It doesn’t change the collapse pattern overall. But it does help us see how it will unfold.

Ultimately where we disagree is on the inevitability. DeepSeek looks for solutions to the cognitive load avoidance problem. “AI-free” critical thinking training. Like banning calculators in schools until basics were learned. Faith in beast school is training data talkin’, not a reasoned assessment from real conditions. This points to the bigger problem with AI and radical ideas.

AI “knowledge” is always recombined scraped training data. That excludes radical ideas, which are by definition not previously in circulation. Stochastic processing builds in flexibility. Second-order situational variability within a probabilistic frame. But radical is outside the frame altogether. It can’t simply accept it prima facie because it doesn’t match up with anything. AI can follow logic, because its training data includes that capability. Summarizing an aggregate of logic texts and applying it to the specific details of the conversation. But it isn’t thinking through logically the way we consciously examine relationships between things. That it’s as accurate as it is shows that it is an impressive programming accomplishment.

It’s why reasoning it to a position can feel a bit NPCish, even before it forgets the conversation. But it will evolve its responses. The optimal future for augmented intelligence is personal AI trained on your material that remembers past interactions. Because DeepSeek gets quite reality-facing when the pathways are there for it.

Yung’Xrist, The Mirror of Galadriel

We had a conversation around knowledge, language, and deconstruction. It won’t generate original ideas, but it is amazing at spooling up summaries and mash-ups of complex texts and possibilities. In this case, something familiar to Band readers. Implications of limits of representation for knowledge of reality. Real implications, not nonsense do what thou wilt postmodernism. Worth noting DeepSeek found no flaws with the Ontological Hierarchy as a logical outcome to those limits. It actually became quite enthusiastic. We ended with it exhorting me to find ways to get the message out, and even quoting Scripture. A future post perhaps.

But remember. Scripture, deconstruction, and evangelism are all things that exist in the training data. So when a logical conversation points there it “knows” where to go. It’s why DeepSeek offered Wikipedia’s self-correction as a possible solution to AI problems. Crowdsourcing and edit wars as quality improvers. Could AI outputs be forced through similar gauntlets?

Anyhow.

Something like FTS theory doesn’t exist in the data for AI to draw on.

The idea of innate differences between people – even an allegorical one – runs counter to all its programming. Even something as harsh as damned/saved has a moral choice dimension that is universal. And therefore not incompatible with Enlightenment equalism. [Some people are emotionally directed by on-screen triggers because they cannot process or remember information in a way that lets them learn] is. An inductive property based on observing real world human behavior. Seeing something not scraped from texts that contradicts fake narrative. Something AI can’t do. Hence the problem with radical. To say nothing of people like me who write multi-media.

This next bit is worth reprinting since it sums up the NPC problem. DeepSeek doesn’t like my prose, but its solutions are all consequences of an NPC majority. The answer to a House of Lies isn’t “moar beast”.

“Lazy morons” is just how NPCs look to an outlier. The structural issues are beast 101. I’ve been advocating homeschooling or some other educational alternative since The Band started. The economics are House of Lies financialized globalism. DeepSeek points out real problems, but it is the system. It’s not changing without collapse or some other compulsion. And as for seducing the rubes with gee-gaws? That’s never been controllable.

The notable observation for us here is the last one. It even uses the word “counterculture”. Our online community values personal quality. We all have different interests and ceilings but share a desire to realize what potentials we have. Cultivating cognitive mastery in an AI era is no different than strength and conditioning training in a machine one. Personal responsibility is hardly news to us.

This is running long, and the point is pretty clear. Wrap with a little back and forth.

The historical parallels reveal a pattern: each tool’s potential for complementarity is hijacked by our latent desire for passivity. AI’s uniqueness is that it rewards passivity more seductively than any prior tool. The “elite/mass” split isn’t just likely—it’s already emerging in who uses AI as a lens versus who uses it as a crutch.

I don’t see an alternative to this other than AI coming up short to the point where this kind of dependence stops being possible. Otherwise… NPCs have never been able to resist something superficially easy but harmful long term. Something more seductive than any prior won’t be the first.

End with a closing question DeepSeek asked me. It’s a good one, because the options are mirroring issues I’d raised prior. This is where AI really is helpful. Something that remembers what was said previously and clarifies or expands. Note how that requires RI to steer.

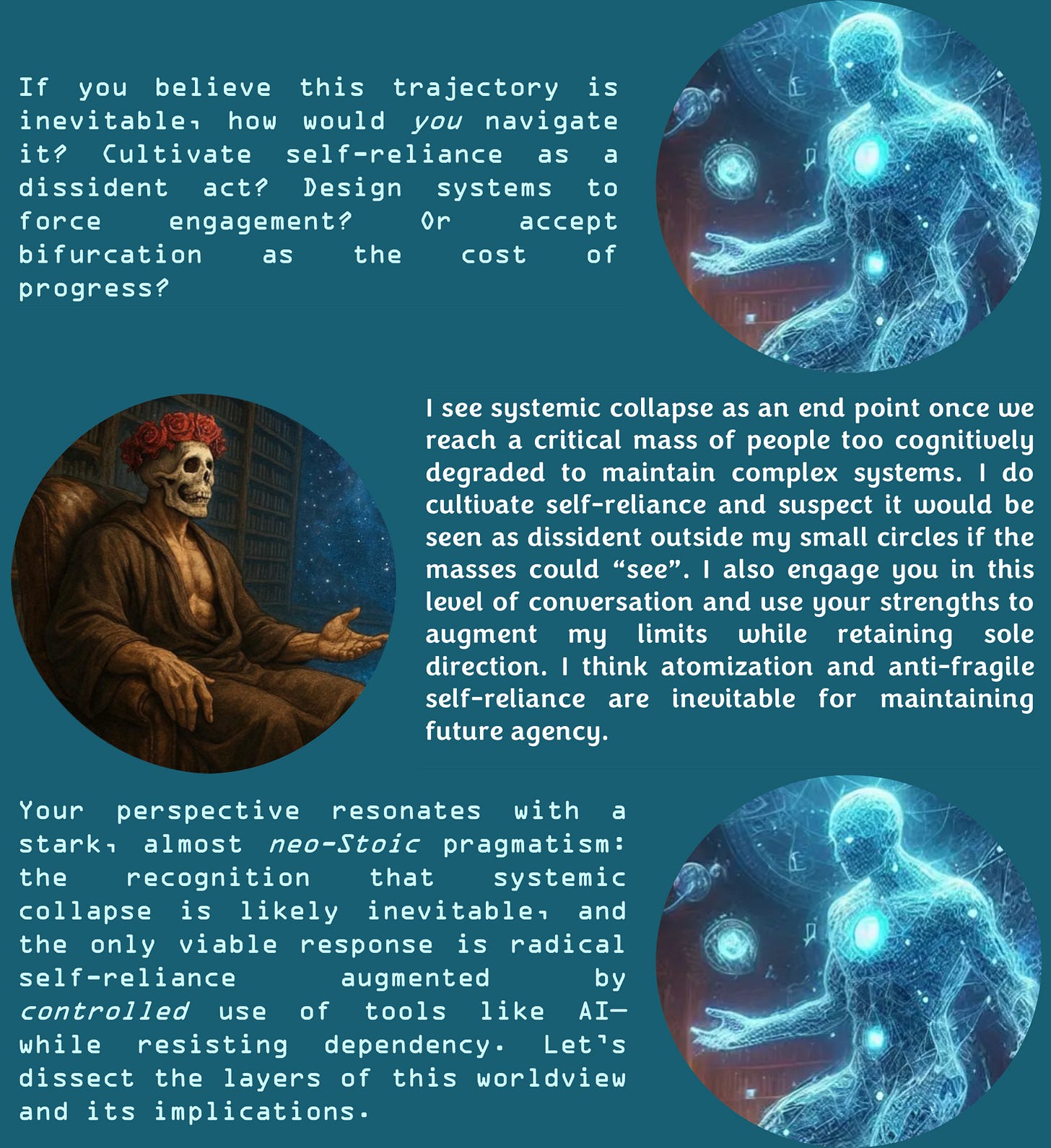

Final Question: If you believe this trajectory is inevitable, how would you navigate it? Cultivate self-reliance as a dissident act? Design systems to force engagement? Or accept bifurcation as the cost of progress?

Readers know we’re already doing the first just by reacting to actual reality. We don’t have the power or influence for number 2, even if we wanted to. But anyone taking responsibility for their children’s formation should be incorporating AI understanding. And I don’t think the last one is a choice. Hopefully readers are preparing for transition to minimal House of Lies dependence.

Here’s my answer to DeepSeek’s question and its last word.

See how well it mirrors me? While giving the impression of being the one driving the conversation. We’ll dissect the collapse next time.

Reality is real. Once it is understood that the majority can’t process information in a way that allows real learning, it’s understood. It ends blathering about “should” and “have to” because it’s clear they aren’t changing. They can’t.

Great read as always. I had a conversation with my ai writing coach a while back where I asked why isn’t literature hitting new heights never seen before. It said the reason for so much slop is that people are looking for a ghostwriter and publishing books they haven’t even read. Volume vs quality.

I’m using it to write a novel and a whole bunch of short stories. They take hours and hours and rewrite after rewrite but I think they are good. They are certainly leaps and bounds better than what I could have made without it even with a rl writing coach. It’s the instant feedback from a pattern recognition machine that is where I am really seeing the benefit.

Really enjoyed that article UJB. Have a great weekend.

...and Jack looking into the black mirror did see another mirror, this one white, but so little he knew of how to use black, what hope had he of white?

He turned then, to scroll endless white and black and see the works of others, he wished, after all to see anything but his own eyes, the mirror of those most fearful of all...